Docker containers, and the Dockerfiles that define them, have become a popular way to deploy applications. Because they are lightweight, they offer an efficient alternative to virtual machines. On low-resource systems, however, optimizing them becomes crucial for efficient performance. This article also mentions docker2shellscript, a tool that converts a Dockerfile into a shell script that can be executed on an Ubuntu image.

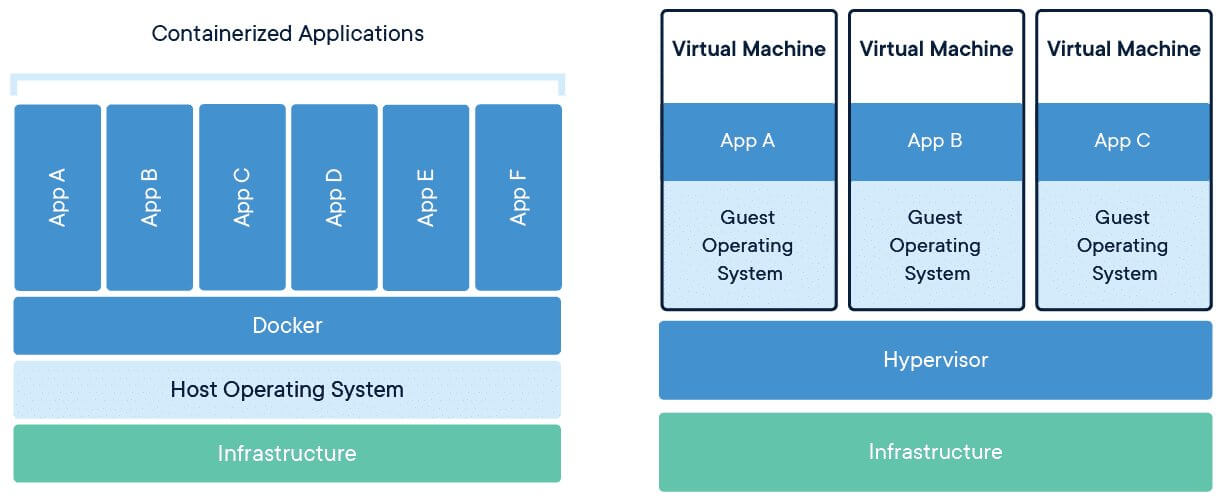

Running applications in production on low-resource systems poses unique challenges for performance optimization. Limited CPU and memory availability can have a significant impact on Docker containers. Because containers share the host’s kernel instead of running a full guest operating system the way virtual machines do, resource allocation also has to be assessed differently. These limitations can be addressed by optimizing the Dockerfile and properly managing system resources. The Dockerfile defines the configuration of your container image, so it is the natural place to tailor the environment to your needs, and a small base image gives you a lightweight foundation (this article uses an Ubuntu image as its running example). Another tool is docker2shellscript, which converts Dockerfiles into shell scripts. From fine-tuning container settings and managing resource allocation to optimizing host machine configuration, we’ll cover a range of techniques that can boost the efficiency of your Dockerized applications.

So let’s dive in and see how you can optimize your Docker containers for low-resource systems, starting from the Dockerfile and the base image and working out to runtime limits and host settings. Keep in mind that containers share the host’s kernel, so kernel-level tuning happens on the host, not inside the image.

Understanding Docker Performance Factors

To optimize Docker containers for low-resource systems, it is crucial to understand the factors that impact Docker performance. Containers share the host’s kernel, so they compete directly for the host’s CPU, memory, disk, and network. By looking at how containers are started with docker run, how large their images are, and how their Dockerfiles are written, we can identify areas for optimization and fine-tune container performance accordingly.

CPU

CPU capacity determines how quickly containers can execute their tasks. The host kernel schedules every container’s processes on the same CPUs, so on a low-resource system running several containers, one busy container can starve the others. It is therefore crucial to monitor CPU usage and to set CPU limits in each container’s configuration. Limiting CPU consumption per container prevents bottlenecks and keeps performance predictable in production. Image size matters as well, since larger images take longer to download and start.

Memory

Memory utilization is another critical factor in Docker performance. If a container is given too little memory, the result is excessive swapping or a crash when the kernel’s out-of-memory killer stops the process. To optimize memory usage, allocate memory according to the specific needs of each container, and consider a lightweight base image such as Alpine to keep the image small. On the host, configuring swap space can absorb short spikes, at the cost of much slower access than RAM. Monitor memory and swap usage regularly and adjust container limits accordingly; sustained swap use is a sign that a container needs a higher limit or that the host needs more memory.

Disk I/O

Disk input/output (I/O) heavily influences the overall performance of Docker containers. Slow disk I/O inside a container can have several causes, including heavy writes to the container’s writable layer or slow underlying storage. Optimizing disk I/O involves selecting appropriate storage options and configuring container volumes effectively. Faster storage such as solid-state drives (SSDs) and better file access patterns can significantly improve container performance on low-resource systems. Note that heavy swapping adds disk I/O of its own, so swap space should be treated as a safety net for memory pressure, not as a way to speed up disk access.

Networking

Networking plays a crucial role in communication between containers and with external services. Inefficient networking configurations can introduce latency or network congestion, affecting overall container performance. Optimizing networking involves choosing suitable network drivers, configuring network settings appropriately, and implementing load balancing where necessary.

Analyzing these key factors (CPU, memory, disk I/O, and networking) allows us to pinpoint areas that require optimization when working with Docker containers on low-resource systems. By addressing each of them, starting with the image and its Dockerfile, we can enhance container efficiency and keep the system performing well.

Optimizing Docker Performance

Minimizing Resource Usage while Maintaining Functionality

Optimizing the performance of Docker containers is crucial for smooth operation on low-resource systems. By minimizing resource usage without compromising application functionality, you can improve the overall efficiency of your containerized applications. A good starting point is a Dockerfile that builds a lightweight image for your application.

One effective technique is to reduce the size of your Docker images. Slim images consume less disk space and bandwidth, start faster, and are easier to move between environments. To achieve this, consider using multi-stage builds in your Dockerfile, and review the image’s dependencies to remove unnecessary components. Swap space on the host can also help: it lets the system use a portion of the disk as virtual memory, which alleviates memory pressure, although swapped pages are much slower to access than RAM. Finally, the application code itself should be optimized for performance, whatever language it is written in.

Another important aspect is the size of the containers themselves. Smaller images go hand in hand with faster deployment times and a reduced footprint. You can achieve this by carefully selecting a lightweight base image, such as Alpine Linux, which is known for its small size.

Employing Best Practices for Optimal Performance

To further optimize Docker performance, it’s essential to follow best practices when running containers. When using the docker run command, specify resource limits such as CPU shares, memory limits, and I/O constraints to ensure efficient resource allocation.
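As a minimal sketch of what such limits look like in practice, the helper below assembles a docker run argument list. The flags --cpus, --memory, --memory-swap, --cpu-shares, and --blkio-weight are standard docker run options; the function name, the image name example/app:latest, and the specific values are hypothetical and only illustrate the shape of the command.

```python
import shlex

def docker_run_command(image, cpus=None, memory=None, memory_swap=None,
                       cpu_shares=None, blkio_weight=None):
    """Build a `docker run` argument list with optional resource limits."""
    cmd = ["docker", "run", "--detach"]
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]              # hard cap on CPU time
    if memory is not None:
        cmd += ["--memory", memory]               # hard memory limit, e.g. "256m"
    if memory_swap is not None:
        cmd += ["--memory-swap", memory_swap]     # memory plus swap combined
    if cpu_shares is not None:
        cmd += ["--cpu-shares", str(cpu_shares)]  # relative weight under contention
    if blkio_weight is not None:
        cmd += ["--blkio-weight", str(blkio_weight)]  # relative block I/O weight
    cmd.append(image)
    return cmd

# Hypothetical image name and limits, chosen only for illustration.
cmd = docker_run_command("example/app:latest", cpus=0.5, memory="256m",
                         memory_swap="512m", cpu_shares=512)
print(shlex.join(cmd))
```

Building the command as a list keeps it safe to pass to subprocess.run without shell quoting issues; printing it first makes it easy to review the limits before anything is started.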

Monitoring container resource usage is crucial for identifying potential bottlenecks and areas for improvement. The docker stats command provides real-time figures for CPU, memory, network, and block I/O usage across all running containers. By checking these statistics regularly, you can spot performance issues early and take appropriate action.
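One way to act on these statistics automatically is to parse them in a script. The sketch below assumes the one-JSON-object-per-line output of docker stats --no-stream --format '{{json .}}' and uses its CPUPerc, MemPerc, and Name fields. The sample data, the thresholds, and the function names are made up for illustration.

```python
import json

# Sample lines in the shape printed by:
#   docker stats --no-stream --format '{{json .}}'
# Container names and numbers are invented for this example.
SAMPLE = """\
{"Name":"web","CPUPerc":"12.50%","MemUsage":"120MiB / 256MiB","MemPerc":"46.88%"}
{"Name":"worker","CPUPerc":"91.20%","MemUsage":"240MiB / 256MiB","MemPerc":"93.75%"}
"""

def parse_percent(text):
    """Convert a string such as '12.50%' to the float 12.5."""
    return float(text.rstrip("%"))

def busy_containers(stats_text, cpu_limit=80.0, mem_limit=90.0):
    """Return names of containers whose CPU or memory percentage exceeds a threshold."""
    names = []
    for line in stats_text.splitlines():
        if not line.strip():
            continue
        row = json.loads(line)
        if (parse_percent(row["CPUPerc"]) > cpu_limit
                or parse_percent(row["MemPerc"]) > mem_limit):
            names.append(row["Name"])
    return names

print(busy_containers(SAMPLE))  # ['worker']
```

In a real script the text would come from running the docker stats command, for example via subprocess.run with capture_output=True, instead of the hard-coded sample.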

Caching plays a significant role in build times when creating Docker images. Docker reuses a cached layer only if that instruction and every instruction before it are unchanged, so structure your Dockerfile to make the most of the cache: put instructions that rarely change (such as installing dependencies) near the top, and place frequently changing instructions (such as copying application source) towards the end. This minimizes unnecessary rebuilds and speeds up image creation and deployment.
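The effect of instruction order on the cache can be illustrated with a deliberately simplified model: a layer is treated as reusable only while every instruction so far is identical to the previous build. Real builds also compare the contents of files used by COPY and ADD, which the "(source v1)" and "(source v2)" markers below merely stand in for. The Dockerfile instructions and the function name are hypothetical.

```python
def reusable_layers(previous_build, current_build):
    """Count leading instructions that are unchanged between two builds.

    Simplified model: once one instruction differs, that layer and every
    later layer must be rebuilt.
    """
    count = 0
    for old, new in zip(previous_build, current_build):
        if old != new:
            break
        count += 1
    return count

# Source copied early: a code change also invalidates the dependency install.
early_v1 = ["FROM python:3.12-slim",
            "COPY . /app (source v1)",
            "RUN pip install -r /app/requirements.txt"]
early_v2 = ["FROM python:3.12-slim",
            "COPY . /app (source v2)",
            "RUN pip install -r /app/requirements.txt"]

# Source copied last: only the final layer is rebuilt after a code change.
late_v1 = ["FROM python:3.12-slim",
           "COPY requirements.txt /app/",
           "RUN pip install -r /app/requirements.txt",
           "COPY . /app (source v1)"]
late_v2 = late_v1[:-1] + ["COPY . /app (source v2)"]

print(reusable_layers(early_v1, early_v2))  # 1
print(reusable_layers(late_v1, late_v2))    # 3
```

In the second ordering the slow dependency installation stays cached across code changes, which is the practical payoff of placing frequently changing instructions last.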

It’s also important to optimize the configuration of the Docker daemon itself. Adjusting parameters such as the storage driver and its options, or increasing the resources available to the host, can significantly affect overall performance.
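Daemon settings are commonly kept in a daemon.json file (on Linux this is typically /etc/docker/daemon.json). The sketch below only generates such a file’s contents. The keys storage-driver, log-driver, and log-opts are standard daemon options, but the values are illustrative assumptions, not recommendations from this article.

```python
import json

# Illustrative values only; tune them to the host in question.
daemon_config = {
    "storage-driver": "overlay2",
    "log-driver": "json-file",
    # Cap log growth so container logs cannot fill a small disk.
    "log-opts": {"max-size": "10m", "max-file": "3"},
}

rendered = json.dumps(daemon_config, indent=2)
print(rendered)
```

The daemon has to be restarted before changes to this file take effect, so it is worth validating the JSON (as json.dumps implicitly does here) before writing it out.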

Ensuring Optimal Docker Performance

By implementing these techniques and best practices, you can get good performance from your Docker containers on low-resource systems. Use memory and CPU efficiently to avoid bottlenecks, configure container limits so that no container is starved of resources, and monitor resource usage regularly so you can adjust container settings. Slim images, smaller containers, and sound practices for running containers all contribute to improved efficiency and smoother operation.

Remember to regularly monitor container resource usage using the docker stats command and adjust configurations as needed to address any performance issues that may arise.

Optimizing Docker performance is an ongoing process. Continuously evaluating and fine-tuning your images and container settings will help maximize efficiency while minimizing resource consumption.

Container Configuration for Low-Resource Systems

Configuring containers for efficiency

Configuring containers carefully is crucial for maximizing efficiency on low-resource systems. By adjusting container settings such as CPU and memory limits, you can prevent resource contention and ensure that containers operate within the available resources without impacting overall system performance. Reducing image size further improves resource utilization.

Preventing resource contention

Resource contention occurs when multiple containers compete for limited resources like CPU and memory, leading to performance degradation. When running Docker containers on low-resource systems, it’s important to configure them to avoid this. By setting appropriate limits for each container, you can allocate resources efficiently and prevent one container from monopolizing the system, which improves both performance and stability.

Optimizing CPU usage

To optimize CPU usage on low-resource systems, you can specify CPU shares or quotas for individual containers. CPU shares distribute processing power among containers according to their relative weight: when CPUs are contended, a container with twice the shares of another receives roughly twice the CPU time, and when CPUs are idle, shares impose no limit. CPU quotas, on the other hand, cap the maximum amount of CPU time a container can use within a given period, regardless of how idle the host is. Together, these settings ensure a fair distribution of computing resources and prevent any single container from overwhelming the system.
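The arithmetic behind shares and quotas can be sketched in a few lines. The first function computes each container’s fraction of CPU time when every container is busy at once, and the second converts a --cpus value into the equivalent quota for the default 100000-microsecond period (so 1.5 CPUs corresponds to a quota of 150000). The container names and share values are hypothetical.

```python
def cpu_fraction_under_contention(shares):
    """Fraction of CPU time each container gets when all are busy at once."""
    total = sum(shares.values())
    return {name: value / total for name, value in shares.items()}

def cpus_to_quota(cpus, period_us=100_000):
    """Convert a `--cpus` value to a CFS quota in microseconds per period."""
    return round(cpus * period_us)

# Hypothetical containers: "api" is weighted twice as heavily as "batch".
fractions = cpu_fraction_under_contention({"api": 1024, "batch": 512})
print(fractions)
print(cpus_to_quota(1.5))  # 150000
```

Shares only matter while containers are competing; an otherwise idle host lets "batch" use all the CPU it wants, whereas a quota applies all the time.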

Managing memory effectively

Memory management is another critical aspect of optimizing Docker containers for low-resource systems. You can set a memory limit for each container to prevent excessive memory consumption and out-of-memory errors that would otherwise affect the whole host. Swap and caching can be used to make better use of the memory that is available. It is also essential to monitor the memory usage of containers regularly, using docker stats or third-party monitoring solutions, to identify potential issues or bottlenecks and adjust the limits.
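How a memory limit and swap interact is easy to get wrong, because docker run’s --memory-swap value is the total of memory plus swap, not the swap alone. The sketch below encodes the rules as I understand them from Docker’s resource-constraint documentation; treat it as an aid to reasoning under that assumption, not as an authoritative restatement. The function name is hypothetical.

```python
MIB = 1024 ** 2

def usable_swap(memory, memory_swap=None):
    """Return the swap (in bytes) a container may use, or None for unlimited.

    `memory` is the --memory limit and `memory_swap` the --memory-swap
    value, both in bytes.
    """
    if memory_swap is None or memory_swap == 0:
        # Unset (or 0, which is treated as unset): swap equal to the memory
        # limit, provided the host has swap configured at all.
        return memory
    if memory_swap == -1:
        return None  # unlimited swap, bounded only by the host
    if memory_swap < memory:
        raise ValueError("--memory-swap must not be smaller than --memory")
    return memory_swap - memory  # equal values mean no swap at all

print(usable_swap(512 * MIB, 768 * MIB) // MIB)  # 256
```

Setting --memory-swap to the same value as --memory is the usual way to make sure a container never touches swap.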

Monitoring container performance

Regularly monitoring container performance is essential to identify any bottlenecks or issues that may arise in low-resource systems. Third-party tools like cAdvisor and Prometheus enable real-time monitoring of resource usage metrics such as CPU utilization, memory consumption, and network activity. By closely monitoring these metrics, you can proactively detect any anomalies or inefficiencies in your container configurations.

Fine-tuning configurations

Optimizing Docker containers for low-resource systems often requires fine-tuning various configuration parameters. These include adjusting network settings, limiting disk I/O, and optimizing storage usage. By fine-tuning these configurations based on the specific requirements of your low-resource system, you can achieve optimal performance while minimizing resource consumption.

Resource Allocation and Utilization in Docker Containers

Efficient resource allocation and utilization are essential for optimal container operation. By allocating resources based on application requirements, we can prevent wastage and improve overall performance.

To achieve better resource utilization within containers, various techniques can be employed. One such technique is resource capping, which involves setting limits on the amount of CPU or memory that a container can consume. This ensures that no single container monopolizes the available resources, allowing for fair distribution among all running containers.

Another technique is prioritization, where certain containers are given higher priority over others. This means that if resources become scarce, containers with higher priority will receive a larger share of the available resources. Prioritization helps ensure that critical applications or services receive the necessary resources to function optimally even under high load conditions.

When allocating resources, it’s crucial to consider both CPU and memory usage. CPU usage refers to the amount of processing power consumed by a container, while memory usage refers to the amount of RAM utilized. Monitoring these metrics allows us to identify any bottlenecks or areas where optimization may be required.

Monitoring tools like Prometheus or cAdvisor can provide valuable insights into resource consumption patterns within Docker containers. These tools collect data on CPU and memory usage over time, allowing administrators to identify trends and make informed decisions regarding resource allocation.

In addition to monitoring tools, Docker itself provides mechanisms for managing resource limits. By passing flags such as --cpus and --memory to docker run, administrators can set hard limits on CPU and memory usage per container.

By optimizing resource allocation and utilization in Docker containers, we can ensure that our applications run efficiently even on low-resource systems. Properly managing resources not only improves performance but also helps avoid potential issues such as crashes or system slowdowns due to excessive resource consumption.

Improving Networking Performance in Docker Containers

Networking is a critical aspect of container communication and overall performance. To optimize the networking capabilities of Docker containers, it is essential to focus on network configurations and leverage advanced networking features.

Container Networking

Container networking refers to the communication between different containers within a Docker environment. By default, each container has its own network stack, allowing them to communicate with each other using IP addresses. However, optimizing container networking can significantly enhance performance.

Optimizing Network Configurations

One way to improve container networking performance is by optimizing network configurations. This involves fine-tuning parameters such as MTU (Maximum Transmission Unit), TCP window size, and congestion control algorithms. By adjusting these settings based on specific use cases and network conditions, you can achieve better throughput and lower latency.
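As one concrete case of such tuning, the MTU of a user-defined bridge network can be set when the network is created, using the bridge driver option com.docker.network.driver.mtu. The sketch below only builds the command. The network name and the MTU value of 1450 are hypothetical, and the right value depends on the underlying network.

```python
def network_create_command(name, mtu, driver="bridge"):
    """Build a `docker network create` argument list with a custom MTU."""
    if not isinstance(mtu, int) or mtu <= 0:
        raise ValueError("mtu must be a positive integer")
    return ["docker", "network", "create",
            "--driver", driver,
            "--opt", f"com.docker.network.driver.mtu={mtu}",
            name]

# Hypothetical network name and MTU, e.g. for a host behind a tunnel.
net_cmd = network_create_command("lowmtu-net", 1450)
print(" ".join(net_cmd))
```

Containers attached to this network with docker run --network lowmtu-net would then use the smaller MTU, which can avoid fragmentation when the host’s own link has a reduced MTU.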

Advanced Networking Features

Docker provides several advanced networking features that can further enhance container networking performance:

- Host Networking: By using host networking mode, containers share the same network namespace as the host machine. This allows them to bypass the virtualized network stack provided by Docker and directly access host interfaces. Host networking can be beneficial for applications that require low-latency communication or rely heavily on host resources.

- Overlay Networks: Overlay networks enable communication between containers running on different hosts, for example the nodes of a Docker Swarm cluster. They use encapsulation techniques like VXLAN (Virtual Extensible LAN) to create a network that spans multiple physical networks. This enables seamless connectivity between containers regardless of their physical location.

- Macvlan: Macvlan is another advanced networking feature that allows containers to have their own MAC addresses and appear as separate devices on the physical network. This enables direct communication with other devices on the same physical network without any overhead introduced by virtualization layers.

Benefits of Optimized Container Networking

Optimizing container networking offers several benefits:

- Improved Performance: Fine-tuning network configurations and leveraging advanced networking features can lead to better throughput, lower latency, and reduced network bottlenecks.

- Enhanced Scalability: By utilizing overlay networks or host networking, containers can communicate seamlessly across multiple hosts or clusters, enabling horizontal scaling of applications.

- Efficient Resource Utilization: Optimized container networking reduces the overhead introduced by virtualized network stacks, allowing for more efficient utilization of system resources.

Optimizing Storage and Disk I/O in Docker Containers

Efficient storage management and disk I/O optimization are crucial for maximizing the performance of Docker containers. By implementing certain techniques and best practices, you can significantly enhance disk I/O speed and reduce latency, resulting in improved overall container performance.

Volume Mounting

One effective technique for optimizing storage in Docker containers is volume mounting. By mounting a volume, or a host directory or file, into the container, the container can access the data directly without it being copied into the container’s filesystem. This saves disk space, and because reads and writes go to the mount and bypass the container’s copy-on-write layer, it also improves I/O speed for data-heavy workloads.
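A mount is requested when the container is started, for instance with the --mount option of docker run. The helper below is a small sketch that formats the option value for a bind mount or a named volume. The paths, volume name, and image name are hypothetical.

```python
def mount_flag(kind, source, target, readonly=False):
    """Return the value for `docker run --mount` (bind mount or named volume)."""
    if kind not in ("bind", "volume"):
        raise ValueError("kind must be 'bind' or 'volume'")
    parts = [f"type={kind}", f"source={source}", f"target={target}"]
    if readonly:
        parts.append("readonly")
    return ",".join(parts)

# Hypothetical host path, container path, and image name.
run_cmd = ["docker", "run",
           "--mount", mount_flag("bind", "/srv/app-data", "/data"),
           "example/app:latest"]
print(" ".join(run_cmd))
```

Marking a mount readonly where the container only needs to read the data is a cheap way to rule out accidental writes to shared files.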

Caching

Caching is another powerful strategy to optimize storage in Docker containers. By utilizing caching mechanisms, you can store frequently accessed data closer to the container, reducing the need for repeated retrieval from slower storage devices. Implementing cache layers at different levels, such as application-level caching or using specialized tools like Redis or Memcached, can significantly improve performance by minimizing disk I/O operations.

Optimized Storage Drivers

Choosing an appropriate storage driver is essential for achieving good performance on low-resource systems. Docker supports storage drivers such as overlay2, btrfs, and zfs, which offer different features and trade-offs; overlay2 is the usual default on current Linux distributions, while the older aufs driver has been deprecated and is not available in recent Docker Engine releases. It’s crucial to select a driver that aligns with your specific requirements while considering factors such as speed, stability, scalability, and compatibility with your underlying infrastructure.

Using benchmarking tools like Fio or running performance tests on different drivers can help identify the most suitable option for your environment. Keeping your storage drivers up to date with the latest versions ensures access to bug fixes and feature enhancements that contribute to better overall performance.

Proper Storage Management

Properly managing storage resources within Docker containers plays a vital role in optimizing their performance. Avoiding unnecessary writes or deletions of files helps minimize disk I/O operations and reduces latency. Regularly monitoring disk usage and removing unused or unnecessary files can help maintain efficient storage utilization.

Implementing strategies like log rotation to manage log files, using smaller base images, and optimizing image layers can also contribute to better storage management. By reducing the size of containers and minimizing the number of layers, you can decrease disk space requirements and improve container startup times.

Performance Monitoring and Debugging in Docker Containers

Monitoring container performance is crucial for identifying bottlenecks and areas that need improvement. By utilizing tools like Docker stats or third-party monitoring solutions, you can achieve real-time performance monitoring. These tools provide valuable insights into resource utilization, including CPU, memory, and disk usage.

Analyzing container logs is an effective debugging technique to identify and resolve performance issues. Container logs capture important information about the container’s activities, errors, and warnings. By examining these logs, you can gain a deeper understanding of what’s happening inside the container and pinpoint any potential issues.

Profiling is another powerful debugging technique that helps identify performance bottlenecks in your containers. Profiling involves analyzing the execution time of different parts of your code within the container. This allows you to identify which parts are consuming excessive resources or causing delays. By optimizing these sections of code, you can significantly improve the overall performance of your containers.

The docker stats command is built into the Docker CLI and provides basic information about running containers’ resource usage. It gives you insights into CPU usage, memory consumption, network I/O statistics, and more. However, for more advanced monitoring capabilities such as alerts and visualizations, third-party monitoring solutions like Prometheus or Datadog are recommended.

Prometheus is a popular open-source monitoring system that integrates well with Docker containers. It collects metrics from various sources within your containerized environment and allows you to query and visualize them using its flexible querying language (PromQL). With Prometheus, you can set up custom alerts based on specific thresholds or conditions to proactively detect any performance issues in your containers.

Datadog is another powerful monitoring solution that offers comprehensive visibility into your Docker infrastructure. It provides real-time metrics visualization along with advanced features like anomaly detection and predictive scaling. With Datadog’s customizable dashboards and intuitive interface, you can easily monitor the performance of your containers and quickly identify any abnormalities.

Strategies for Efficient Docker Image Management

Efficient image management is crucial for reducing resource usage and speeding up container deployment. By implementing certain strategies and best practices, you can optimize your Docker containers for low-resource systems. Let’s explore some techniques that can enhance image management efficiency.

Minimal Base Images

Using minimal base images is a recommended practice when optimizing Docker containers. These lightweight base images contain only the essential components required to run your application, resulting in smaller file sizes and reduced resource consumption. By avoiding unnecessary packages and dependencies, you can significantly improve the performance of your containers on low-resource systems.

Optimizing Image Layers

Optimizing image layers is another effective strategy for efficient Docker image management. When building Docker images, each instruction in the Dockerfile creates a new layer. By organizing these instructions thoughtfully, you can minimize layer duplication and reduce the overall size of your images.

One approach is to leverage multi-stage builds, which allow you to separate build-time dependencies from runtime dependencies. This way, you can discard unnecessary build artifacts and create leaner final images.

Implementing Image Caching

Image caching plays a vital role in optimizing container deployment speed. With image caching, previously built layers are stored locally so that subsequent builds can reuse them instead of rebuilding from scratch. This reduces both build time and network bandwidth consumption.

By configuring your build system or CI/CD pipeline to utilize image caching effectively, you can achieve faster container deployments while minimizing resource usage.

Version Control and Automated Builds

To streamline the image management process, it’s essential to implement version control and automated build mechanisms. Version control allows you to track changes made to your Dockerfiles over time, ensuring reproducibility and facilitating collaboration among team members.

Automated builds further enhance efficiency by automatically triggering image builds whenever changes are pushed to a repository or a specific branch. This eliminates manual intervention in the build process and ensures consistent image generation across different environments.

By combining version control with automated builds, you can maintain a well-organized image repository and easily manage updates and rollbacks.

Incorporating these strategies into your Docker image management workflow will help optimize resource usage and accelerate container deployment on low-resource systems. By using minimal base images, optimizing image layers, implementing image caching, and leveraging version control and automated builds, you can ensure efficient utilization of resources while maintaining the performance of your Docker containers.

Planning Resource Allocation for Optimal Container Operation

To ensure optimal container operation on low-resource systems, proper planning of resource allocation is crucial. By analyzing application requirements and estimating resource needs, you can effectively allocate resources and avoid bottlenecks that may hinder performance.

Analyzing Application Requirements and Estimating Resource Needs

Before deploying containers on low-resource systems, it is essential to thoroughly analyze the application’s requirements. This analysis helps in understanding the specific resource demands of the application and allows for better planning of resource allocation.

By examining factors such as CPU usage, memory requirements, disk space utilization, and network bandwidth usage, you can estimate the resources each container will need. This estimate lets you allocate resources efficiently without over- or under-provisioning.

For example, if an application requires high CPU processing power but has minimal memory requirements, allocating more CPU resources while limiting memory allocation would be a suitable approach. By tailoring resource allocation according to the specific needs of each containerized application, you can optimize their operation on low-resource systems.
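As a rough sketch of turning such estimates into limits, the hypothetical helper below builds a docker run argument list using the --cpus and --memory flags. The 25% headroom, the image name, and the measured values are assumptions for illustration, not recommendations.

```python
def docker_run_command(image, cpus, memory_mb, headroom=0.25):
    """Return a `docker run` argument list with CPU and memory limits.

    `cpus` and `memory_mb` are the measured peak needs of the application;
    `headroom` adds a safety margin on top of both (an assumed default).
    """
    cpu_limit = round(cpus * (1 + headroom), 2)
    memory_limit = int(memory_mb * (1 + headroom))
    return [
        "docker", "run", "-d",
        f"--cpus={cpu_limit}",
        f"--memory={memory_limit}m",
        image,
    ]


# Hypothetical CPU-heavy, memory-light workload.
print(" ".join(docker_run_command("example/worker:1.0", cpus=2.0, memory_mb=64)))
```

Keeping the estimate and the margin as separate inputs makes it easy to revisit the limits when monitoring shows the application's real usage.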

Vertical Scaling for Meeting Application Demands

When dealing with applications running in containers on low-resource systems, vertical scaling can be an effective technique to meet increasing demands without compromising performance. Vertical scaling means giving individual containers more resources (such as CPU cores or RAM), for example by raising their CPU and memory limits, or scaling up the entire system by upgrading hardware components.

By vertically scaling containers based on their specific resource needs during peak times or increased workloads, you can ensure that they have sufficient resources to operate optimally. This approach allows containers to handle higher traffic loads without experiencing performance degradation or bottlenecks caused by limited resources.

For instance, if an e-commerce website experiences a surge in traffic during holiday seasons or flash sales events, vertical scaling can help allocate additional CPU and memory resources to handle the increased workload efficiently.
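For a running container, limits can be raised with docker update. The sketch below only assembles the command. The container name is hypothetical, and setting the swap limit to twice the memory limit is an assumption chosen to mirror Docker's default when no swap limit is given.

```python
def docker_update_command(container, cpus=None, memory_mb=None):
    """Return a `docker update` argument list that raises a container's limits."""
    args = ["docker", "update"]
    if cpus is not None:
        args.append(f"--cpus={cpus}")
    if memory_mb is not None:
        # Assumption: keep total memory plus swap at twice the memory limit,
        # so the new memory limit never exceeds an older swap limit.
        args.append(f"--memory={memory_mb}m")
        args.append(f"--memory-swap={memory_mb * 2}m")
    if len(args) == 2:
        raise ValueError("specify at least one of cpus or memory_mb")
    args.append(container)
    return args


# Hypothetical container name for a storefront service during a sale.
print(" ".join(docker_update_command("shop-web", cpus=2, memory_mb=1024)))
```

On a low-resource host, the new limits still have to fit within what the machine physically has, so scaling one container up may mean scaling another down.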

Horizontal Scaling for Load Distribution

Another technique that aids in optimizing container operation on low-resource systems is horizontal scaling. Horizontal scaling involves distributing the workload across multiple containers, allowing for better resource utilization and improved performance.

By horizontally scaling containers, you can distribute incoming requests or tasks among multiple instances of an application. This approach ensures that no single container is overwhelmed with excessive traffic, preventing bottlenecks and maintaining optimal performance.

For example, a microservices architecture implemented through containers can benefit from horizontal scaling. Each microservice can be deployed in multiple containers, allowing for load balancing and fault tolerance. If one container becomes overloaded or fails, a load balancer or orchestrator can shift the workload to other healthy containers, keeping the service available.
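The load distribution described above can be sketched as a round-robin selector that skips unhealthy instances. This is a minimal illustration with hypothetical container names. A real deployment would normally rely on a reverse proxy or orchestrator rather than hand-written code.

```python
class RoundRobinBalancer:
    """Hand out container names in turn, skipping unhealthy ones."""

    def __init__(self, containers):
        self.containers = list(containers)
        self.healthy = set(self.containers)
        self._next = 0

    def mark_unhealthy(self, name):
        self.healthy.discard(name)

    def mark_healthy(self, name):
        if name in self.containers:
            self.healthy.add(name)

    def pick(self):
        # Try each container at most once per call.
        for _ in range(len(self.containers)):
            candidate = self.containers[self._next]
            self._next = (self._next + 1) % len(self.containers)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy containers available")


# Hypothetical replicas of one microservice.
balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
balancer.mark_unhealthy("web-2")
print([balancer.pick() for _ in range(4)])
```

Requests keep flowing to the remaining replicas while the unhealthy one is out of rotation, and calling mark_healthy puts it back.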

Conclusion

Congratulations! You’ve now learned valuable strategies for optimizing Docker containers in low-resource systems. By understanding the performance factors, configuring containers efficiently, and allocating resources effectively, you can enhance the overall performance of your Docker environment.

But optimization doesn’t stop here. Take action and apply these techniques to your own Docker setup. Monitor and debug your containers regularly, fine-tune your networking and storage configurations, and carefully manage your Docker images. Remember, the key is to strike a balance between resource utilization and container operation.

Now it’s time to put your newfound knowledge into practice. Start optimizing your Docker containers today and experience improved performance on low-resource systems. Happy containerizing!

Frequently Asked Questions

How can I optimize Docker containers for low-resource systems?

To optimize Docker containers for low-resource systems, you can start by understanding the performance factors that affect Docker. Then, focus on container configuration, resource allocation, networking performance, storage and disk I/O optimization, and efficient image management. It’s also important to monitor and debug performance issues while planning resource allocation for optimal container operation.

What are some key performance factors to consider when using Docker?

When using Docker, it’s crucial to consider factors like CPU and memory utilization, network performance, disk I/O operations, and container startup time. These factors directly impact the overall performance of your Docker containers on low-resource systems.

How can I improve networking performance in Docker containers?

To improve networking performance in Docker containers, you can create a dedicated bridge network for your containers, tune DNS resolution settings, place containers so that traffic takes fewer network hops, and use host-mounted volumes instead of network file systems.

What are some ways to optimize storage and disk I/O in Docker containers?

To optimize storage and disk I/O in Docker containers for low-resource systems, you can choose a storage driver that suits your requirements (overlay2 is the usual choice on current Linux distributions, while the older aufs driver is deprecated), limit unnecessary container writes through read-only filesystems or shared volumes where applicable, and monitor disk usage to prevent excessive consumption.

How do I efficiently manage Docker images?

Efficiently managing Docker images involves regularly cleaning up unused and dangling images with commands like docker image prune, using multi-stage builds to reduce image size and dependencies, and leveraging build caching with appropriate invalidation strategies to avoid unnecessary downloads or rebuilds.